Understanding the Difference Between Website Indexing and Web Crawling

For website owners and SEO professionals, it is crucial to understand the difference between website indexing and web crawling. These two terms are often used interchangeably, but they refer to distinct processes that search engines employ to gather and store information about websites, allowing them to deliver relevant search results to users.

Website Crawling

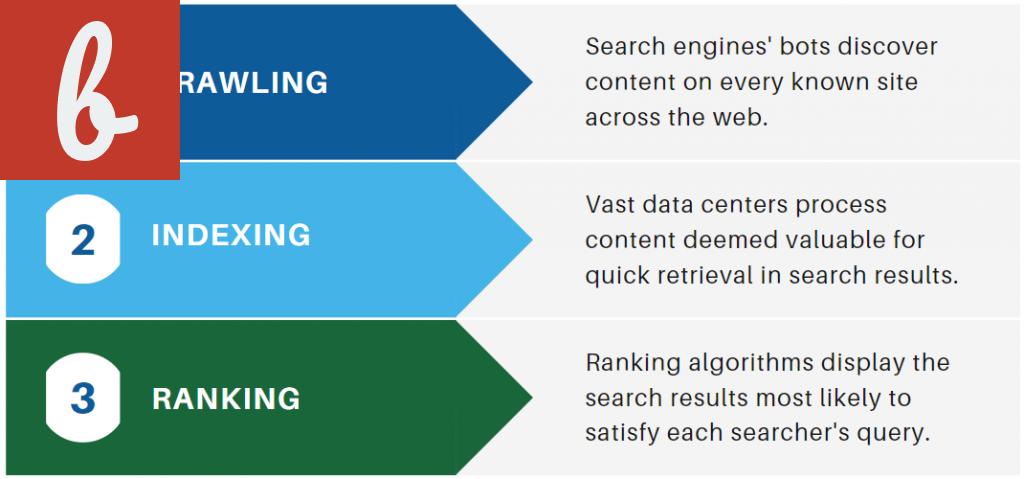

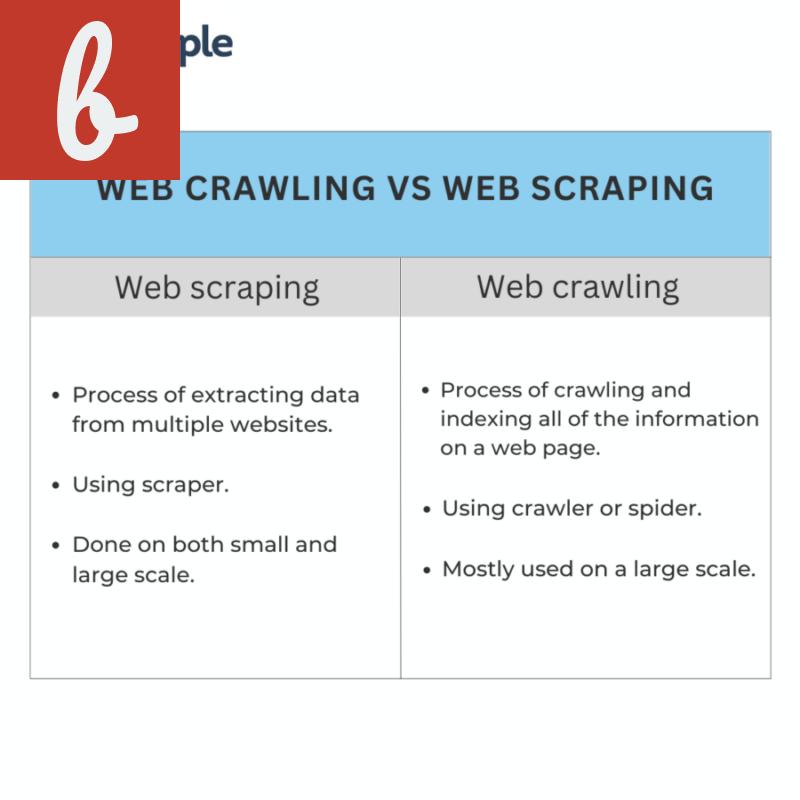

Web crawling is the initial step search engines take to discover and analyze web pages. Crawlers, also known as bots or spiders, are automated software programs that systematically navigate the internet, starting from a list of seed URLs.

During the crawling process, search engine crawlers follow links from one webpage to another, gathering information about each page they encounter. They analyze the page's content, structure, and metadata to determine its relevance and value. This information is then indexed and stored in the search engine's database for future retrieval.

Website Indexing

Website indexing is the process of organizing and storing the information obtained from web crawling. Once a search engine crawler collects data about a webpage, it indexes the page by creating a searchable record or entry for it in its database. This index allows the search engine to quickly retrieve relevant results based on user queries.

During the indexing process, the search engine parses the content of a webpage, extracts keywords and phrases, and assigns them relevant attributes such as page title, meta description, headers, and body text. These attributes help search engines understand the page's content and index it appropriately under relevant keywords.

- Key Differences Between Crawling and Indexing

- Crawling is the process of discovering and navigating web pages, while indexing is the process of storing and organizing the information gathered from those pages.

- Crawling is primarily concerned with finding new web pages and revisiting previously crawled pages to check for updates, while indexing focuses on analyzing the content and attributes of web pages.

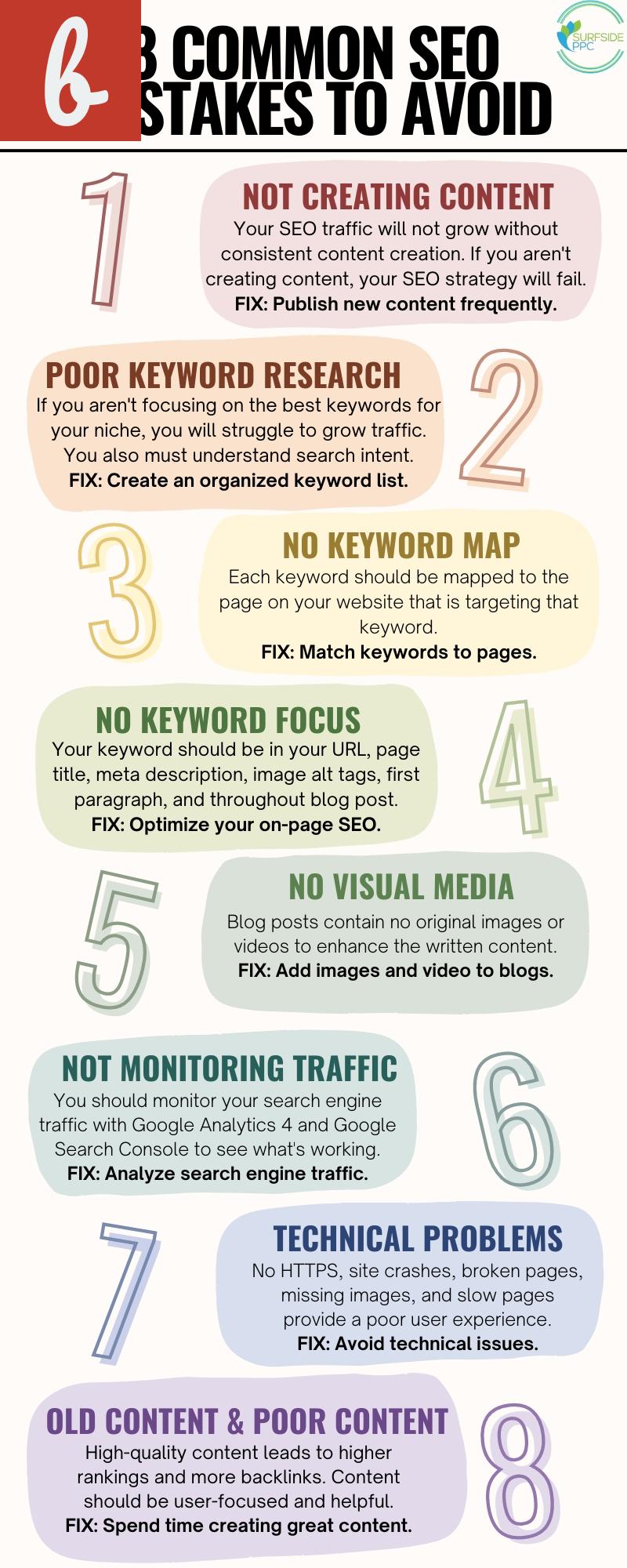

It's important to note that not all crawled web pages are indexed. Search engines use various algorithms and quality assessments to determine which pages to include in their index. Pages with high-quality content, proper technical optimization, and strong relevance to popular search queries are more likely to be indexed.

Conclusion

Understanding the difference between website indexing and web crawling is crucial for website owners and SEO professionals. While web crawling involves the discovery and analysis of web pages, website indexing is the process of organizing and storing the information gathered through web crawling. By comprehending these processes, individuals can optimize their websites to improve visibility and search engine rankings.

Main Title

When it comes to search engine optimization (SEO), two terms that often come up are website indexing and web crawling. While these terms are related, they have distinct meanings and functions within the realm of SEO.

Web crawling refers to the process where search engines send out bots or spiders to scour the internet for web pages. These bots follow links from one page to another, collecting information about the content and structure of each webpage they encounter. The data collected by these bots is then used by search engines to build their indexes.

Web crawling starts with a seed URL, which is the starting point. From there, the bots follow links, discover new pages, and add them to the search engine's index. Websites can make it easier for bots to crawl their webpages by optimizing their site structure and implementing techniques like XML sitemaps and robots.txt files.

- List item 1: Web crawling is essential for search engines to discover and index new content on the web.

- List item 2: Web crawling allows search engines to understand the structure of websites and the relationship between different webpages.

Website indexing, on the other hand, is the process where search engines store and organize the information collected during web crawling. It involves creating a searchable database of web pages and their content. Indexing makes it possible for search engines to retrieve relevant results quickly when users perform a search query.

During indexing, search engines analyze various factors such as keywords, meta tags, backlinks, and user behavior to determine the relevance and ranking of a webpage in search results. Websites can optimize their indexing by using appropriate meta tags, providing high-quality content, and ensuring their website is easily navigable.

Website indexing and web crawling are interconnected processes that are crucial for SEO. Without web crawling, search engines would not know about the existence of new web pages, and without website indexing, search engines would not be able to provide relevant search results to users.

In conclusion, web crawling is the initial step where search engines discover and collect information about web pages, while website indexing is the process of organizing and storing this information to facilitate quick and relevant search results. Both processes play a vital role in optimizing websites for search engine visibility and user accessibility.

Demystifying Website Indexing and Web Crawling: A Comprehensive Guide

Are you curious about how search engines work and how they are able to deliver relevant results? It all starts with website indexing and web crawling. In this comprehensive guide, we will walk you through the process of how search engines crawl and index websites, and why it is important for your website's visibility in search results.

Web crawling is the process in which search engines like Google and Bing send out bots, also known as crawlers or spiders, to discover and scan web pages. These bots follow links from one page to another, building a map of the internet.

When a crawler arrives at your website, it starts by fetching the content of your homepage and follows any links it finds on that page. As it navigates through your website, it gathers information about the pages it visits, such as the URL, content, and metadata. This information is then passed on to the search engine's indexing system.

Website indexing is the process of organizing and storing the information collected by the web crawler. It involves analyzing the content of each web page and cataloging it in the search engine's database. The index is essentially a massive library of web pages, ready to be searched and returned as results to user queries.

Now, you may be wondering why indexing is important for your website. Well, when your website is properly indexed, it significantly increases the chances of being discovered by search engine users. If search engines cannot find and index your website's pages, it will be invisible to potential visitors, resulting in low organic traffic and poor visibility in search results.

So, how can you ensure that your website is being properly crawled and indexed? Here are a few tips:

- Create a sitemap: A sitemap is a file that lists all the pages on your website, making it easier for search engines to discover and crawl your content. Submitting a sitemap to search engines can expedite the indexing process.

- Optimize your website structure: Make sure your website has a clear and organized structure with logical internal linking. This helps search engine crawlers to navigate and understand your website better.

- Use descriptive metadata: Metadata, such as title tags and meta descriptions, provides information about the content of a web page. Including relevant keywords in your metadata can help search engines understand the relevance of your website to specific search queries.

- Create high-quality, original content: Producing valuable and unique content is crucial for search engine visibility. Search engines prioritize websites that offer quality and relevant information to their users.

- Monitor crawl errors: Regularly check your website's crawl error report in the search console. Fix any crawl errors that may prevent search engines from accessing certain pages on your site.

In conclusion, website indexing and web crawling are integral processes that determine the visibility of your website in search engine results. By understanding how these processes work and implementing best practices, you can enhance your website's chances of being indexed and increase its visibility to potential visitors.

Website Indexing vs. Web Crawling: Unveiling the Contrasts

In the vast realm of the internet, website indexing and web crawling are two distinct processes that play a crucial role in how search engines gather and organize information. Although often used interchangeably, these terms actually refer to different aspects of the search engine optimization (SEO) landscape. Understanding the contrasts between website indexing and web crawling is essential for anyone aiming to enhance their online visibility. Let's explore the differences below:

Website Indexing: When search engines index a website, they essentially create a database or index of the website's content. This process involves analyzing and cataloging various aspects of a website, such as its web pages, images, metadata, and other relevant information. The purpose of website indexing is to make it easier and faster for search engines to retrieve and present relevant results when users search for specific keywords or phrases. By indexing a website, search engines can effectively understand and categorize its content.

Web Crawling: On the other hand, web crawling refers to the automated process of discovering and systematically scanning websites. Search engine bots, also known as spiders or crawlers, navigate through the web by following hyperlinks from one webpage to another. This crawling process allows search engines to find and retrieve new or updated content, which is then indexed for search engine result pages (SERPs). Essentially, web crawling is the initial step that enables search engines to gather data from websites.

- Website Indexing Key Points:

- Creation of a database or index of website content.

- Analysis and cataloging of web pages, images, metadata, etc.

- Facilitates faster and more accurate search engine results.

- Allows search engines to categorize and understand website content.

- Web Crawling Key Points:

- Automated process of discovering and scanning websites.

- Performed by search engine bots or crawlers.

- Enables search engines to find and retrieve new or updated content.

- Essential for keeping search engine indexes up-to-date.

It's important to note that although indexing and crawling are separate processes, they are interconnected and occur simultaneously. Web crawling enables search engines to discover and retrieve new content, which is then indexed for efficient retrieval in search results. This continuous cycle ensures that search engines have the most up-to-date and relevant information available for users.

Conclusion: Website indexing and web crawling are integral components of how search engines gather, organize, and present information. Website indexing involves the creation of an index or database of website content, while web crawling is the process of automatically discovering and scanning websites. By understanding the distinctions between website indexing and web crawling, website owners and SEO practitioners can optimize their strategies to improve online visibility and search engine rankings.

Understanding the Key Differences: Website Indexing vs. Web Crawling

When it comes to search engine optimization (SEO), it is crucial to understand the key distinctions between website indexing and web crawling. Both processes play a vital role in ensuring that your website and its content are discoverable by search engines. Let's explore these concepts further and uncover their significance in optimizing your online presence.

Website Indexing: Website indexing is the process of adding web pages into a search engine's database or index. It is like building a library catalog that enables search engines to quickly find and retrieve relevant information when a user initiates a search query. During the indexing process, search engines analyze the web page's content, meta tags, and other factors to understand its relevance and assign it a position within the search results.

Indexing relies on search engine bots, also known as spiders or crawlers, which systematically navigate through multiple websites and web pages. These bots analyze the content and structure of each web page they encounter and then store the information in the search engine's database. When a user searches for specific keywords, the search engine retrieves relevant web pages from its index and displays them in the search results.

- List item 1: To ensure that your website gets indexed promptly, it is essential to submit your website's sitemap to search engines. A sitemap is a file that contains a list of all the pages on your website, allowing search engine bots to discover and index them more effectively.

- List item 2: Creating high-quality and relevant content is crucial for website indexing. When search engine bots crawl your site and find valuable content, they are more likely to index it and show it in the search results.

Web Crawling: Web crawling, also known as web spidering or web data extraction, is the process by which search engine bots systematically navigate through websites and web pages to gather information. These bots follow links from one page to another, collecting data on the content, structure, and other elements of each web page.

During the crawling process, search engine bots discover new web pages, update existing pages, and identify broken links. This continuous crawl ensures that search engines have the most up-to-date information about your website. However, it's important to note that not all crawled pages are indexed. The indexing process evaluates the relevance and quality of a web page before including it in the search engine's index.

Conclusion:

In the realm of SEO, understanding the distinctions between website indexing and web crawling is essential. While web crawling involves the systematic exploration of websites by search engine bots, website indexing refers to the process of adding web pages to a search engine's index. By focusing on creating high-quality content, submitting sitemaps, and ensuring a crawlable website structure, you can optimize your chances of getting your website indexed and gaining visibility in search engine results.

Comments on "Website Indexing Vs. Web Crawling: What's The Difference? "

No comment found!